I would wager that, at any given moment, the majority of aid workers in the world are doing the exact same thing. We like to imagine aid workers out in the field, but that's not where most of the work actually happens. Instead, most aid workers are hunched over their computers, trying to figure out a way to measure their impact. How do we know if what we're doing makes a difference? And, equally important – can we capture this on an Excel spreadsheet?

We spend hours, weeks, months, trying to measure our impact – donors demand that we do so, yet it also satisfies our own need for reassurance. We pay lip service to the belief that impact measurement is difficult, but we rarely question whether it is actually possible. Perhaps therein lies an existential fear – if we can't measure our impact, then are we having any at all?

Yet the world doesn’t work this way. Causality is simply too complex, no matter what domestic or international issue we are trying to address. No logframe or theory of change is capable of capturing the full interplay of factors that determine why things are as they are, much less how and why they change.

As Primo Levi writes in The Drowned and the Saved: “Without a profound simplification the world around us would be an infinite, undefined tangle that would defy our ability to orient ourselves and decide upon our actions … We are compelled to reduce the knowable to a schema.”

These schemas are sufficient to allow us to navigate our everyday lives, yet they are far too imprecise to draw anything but the broadest generalisations when it comes to the best way to address social issues, much less measure discrete impact. We can, at best, see through a glass, darkly.

Our logframes are fictions – necessary fictions, but fictions nonetheless. This is, perhaps, the fundamental way in which development differs from the private sector and scientific endeavours. Both are artificially bounded systems, in which it is possible to trace causality with some degree of certainty. At the simplest level, for instance, company-level success is a function of profits and losses – can you sell goods and services for more than they cost to produce?

There's no way to limit the variables in play when it comes to, say, improving education or health outcomes, or the attempt to end genocide. The closest approach that we have is the randomised controlled trial, yet proving replicability across countries and time remains a significant – and prohibitively expensive – challenge.

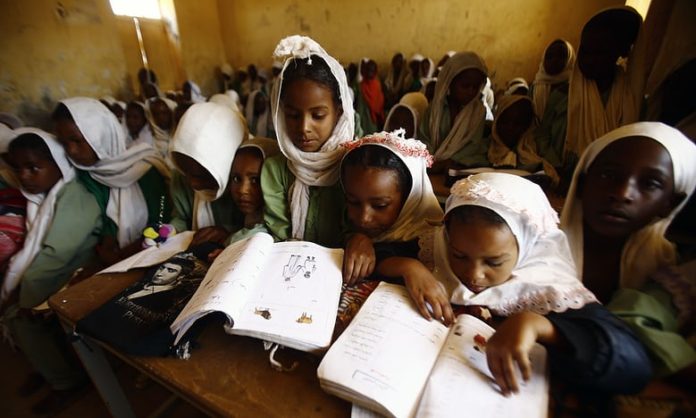

Did your programme to increase female enrolment in secondary schools succeed because of the tutoring and mentorship you offered, or because family incomes were rising, allowing parents to pay the school fees? Did your project to reduce maternal mortality fail to show results because your interventions were flawed, or because the region where you worked was an opposition stronghold, subject to retaliation from the government? Did American or British advocacy around Darfur influence the Sudanese government to try to make the situation better, or worse?

Hannah Arendt explained the problem in her book The Human Condition: “Every deed and every new beginning falls into an already existing web, where it nevertheless somehow starts a new process that will affect many others even beyond those with whom the agent comes into direct contact … The smallest act in the most limited circumstances bears the seed of the same boundlessness and unpredictability; one deed, one gesture, one word may suffice to change every constellation. In acting, in contradistinction to working, it is indeed true that we can really never know what we are doing.”

This is not a nihilistic call for inaction. Quite the opposite. Our obsession with measuring impact has, paradoxically, paralysed us. We focus on those interventions that promise to produce measurable results. We are like the drunk looking for our car keys under the street lamp – not because that’s where we lost them, but because that’s where the light is.

Rigour becomes an excuse for complacency, if not cowardice. The focus on measuring impact limits our willingness to take risks. We must balance a desire for certainty against the knowledge that our ability to measure impact will always be, at best, limited.

Development is not a science – it is a struggle to try to improve the human condition, but a struggle in which we are denied the ultimate reassurance of knowing whether we were successful, or not.

This is, of course, also personal. I certainly cannot claim any lasting successes in my career. Plenty of projects failed, or magically turned into lessons learned. Some succeeded within our narrow frame of reference – especially if we measure success as the number of trainings held, or capacity built. And then I moved on to the next. I am a cynic, but I have yet to despair, or at least not fully. I do not know whether anything I've done has made a difference – which is different from believing that it has not.

I try to find some comfort in this distinction.

International development – any development endeavour that hopes, eventually, to win an important fight – must embrace this uncertainty. Otherwise, what are we doing?

Michael Kleinman is the founder of Orange Door Research. He previously worked for NGOs in Afghanistan, east and central Africa, and Iraq, as well as for foundations.

Source: Guardian